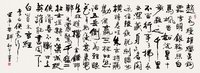

Lesson 55:

1. How to pause a program

import time

....time.sleep(5)
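As a minimal sketch of where such a pause is typically useful, the helper below sleeps between consecutive calls, for example between successive page requests. The name `run_with_pauses` and its parameters are hypothetical and are not part of the lesson.

```python
import time


def run_with_pauses(tasks, delay_seconds):
    """Call each task in order, sleeping delay_seconds between consecutive calls."""
    results = []
    for index, task in enumerate(tasks):
        if index > 0:
            # time.sleep blocks the current thread for the given number of seconds
            time.sleep(delay_seconds)
        results.append(task())
    return results
```

Calling `run_with_pauses([job_a, job_b], 5)` would run `job_a`, wait five seconds, then run `job_b`.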

2. Using a proxy

Steps:

1. The argument is a dictionary of the form {'scheme': 'proxy IP:port'}

proxy_support = urllib.request.ProxyHandler({})

2. Customize and create an opener

opener = urllib.request.build_opener(proxy_support)

3a. Install the opener (every later urllib.request.urlopen call then goes through it)

urllib.request.install_opener(opener)

3b. Or call the opener directly (only that call goes through it)

opener.open(url)
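The steps above can be sketched as one small helper. The function name `make_proxy_opener` is hypothetical, and the address `192.0.2.1:8080` is a placeholder from a documentation-only IP range, not a working proxy.

```python
import urllib.request


def make_proxy_opener(proxy_address):
    """Build an opener that sends http requests through proxy_address ('IP:port')."""
    # Step 1: the handler takes a dict mapping scheme to 'IP:port'
    proxy_support = urllib.request.ProxyHandler({'http': proxy_address})
    # Step 2: build an opener that includes this handler
    return urllib.request.build_opener(proxy_support)


# Placeholder address (assumption): replace it with a proxy you are allowed to use.
opener = make_proxy_opener('192.0.2.1:8080')

# Step 3a: urllib.request.install_opener(opener) would make urlopen() use the proxy globally.
# Step 3b: opener.open(url) would use the proxy for that single call only.
```

Installing the opener is convenient for short scripts, while calling `opener.open` directly keeps the proxy choice local to one request.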

A site that lists free proxy IPs: http://www.xicidaili.com/

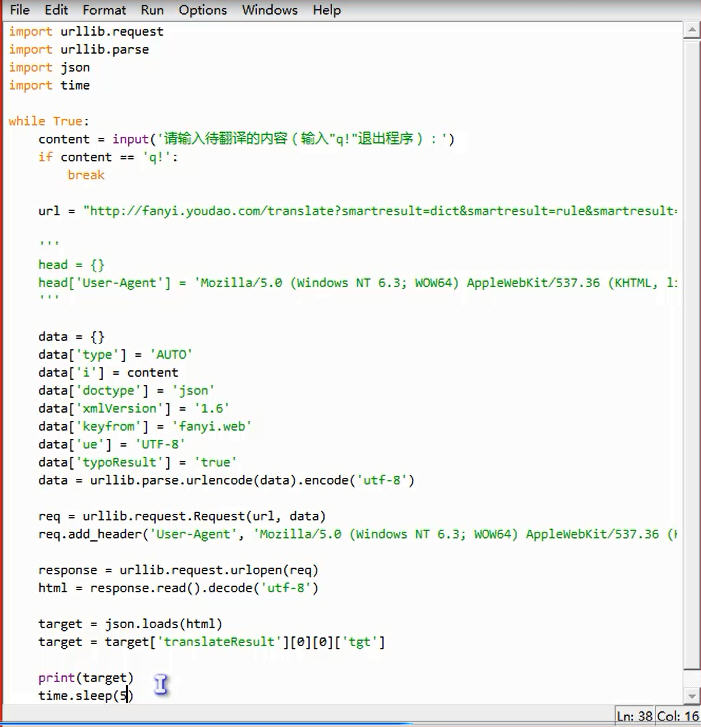

Source code that uses a proxy:

import urllib.request

import random

url = 'http://www.whatismyip.com.tw/'

iplist = ['219.150.242.54:9999','218.56.132.158:8080','58.59.68.91:9797','123.138.216.91:9999']

proxy_support = urllib.request.ProxyHandler({'http':random.choice(iplist)})

opener = urllib.request.build_opener(proxy_support)

opener.addheaders = [('User-Agent','Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.109 Safari/537.36')]

urllib.request.install_opener(opener)

response = urllib.request.urlopen(url)

html = response.read().decode('utf-8')

print(html)
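Free proxies often stop responding, so the script above may fail if the randomly chosen address is dead. The following is a rough sketch, not part of the original lesson, that tries the proxies in random order and moves on when one fails. The function name `fetch_with_fallback`, the `timeout` default, and the `build` parameter (there only so the opener factory can be swapped out) are all assumptions.

```python
import random
import urllib.error
import urllib.request


def fetch_with_fallback(url, proxies, timeout=10, build=urllib.request.build_opener):
    """Try each proxy in random order and return the first successful body as bytes."""
    candidates = list(proxies)
    random.shuffle(candidates)
    last_error = None
    for proxy in candidates:
        handler = urllib.request.ProxyHandler({'http': proxy})
        opener = build(handler)
        try:
            with opener.open(url, timeout=timeout) as response:
                return response.read()
        except OSError as error:
            # urllib.error.URLError and socket timeouts are both OSError subclasses;
            # treat them as a dead or slow proxy and try the next one
            last_error = error
    raise RuntimeError('no proxy in the list worked') from last_error
```

With the list from the script above, a call might look like `fetch_with_fallback(url, iplist).decode('utf-8')`.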

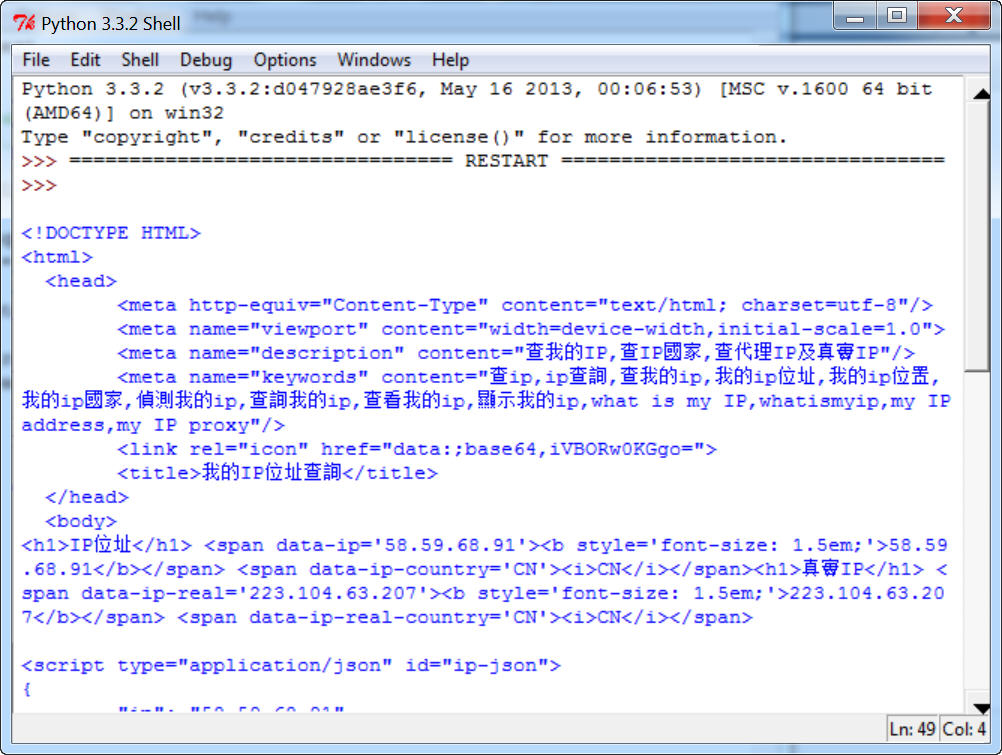

Result:

3. Using urllib in Python 3

import urllib.request

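def get_content():

    # read() returns the raw response body as bytes
    html = urllib.request.urlopen("http://www.xx.com").read()

    # decode the bytes to str; 'gbk' is a common encoding for Chinese pages
    html = html.decode('gbk')

    print(html)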
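Hard-coding the encoding works only when the page really is GBK. As a hedged sketch, the helper below prefers a charset declared by the server and falls back to GBK otherwise. The name `decode_html` and its parameters are hypothetical.

```python
def decode_html(raw_bytes, declared_charset=None, fallback='gbk'):
    """Decode a response body, preferring the charset declared in the HTTP headers."""
    encoding = declared_charset or fallback
    # errors='replace' substitutes undecodable bytes instead of raising UnicodeDecodeError
    return raw_bytes.decode(encoding, errors='replace')


# Possible usage with a urllib response (not executed here):
#   response = urllib.request.urlopen(url)
#   text = decode_html(response.read(), response.headers.get_content_charset())
```

`get_content_charset()` returns `None` when the `Content-Type` header names no charset, in which case the fallback is used.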

Originally published on: 蜗牛博客 (Snail Blog)

URL: http://www.snailtoday.com

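Please respect copyright: when reposting, be sure to credit the author and the original source, and include this notice, in the form of a link.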