I. Usage

Running the script generates a .txt file containing the extracted words.

II. Code:

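# encoding: utf-8
import struct
import binascii


class Baidu(object):
    def __init__(self, originfile):
        self.originfile = originfile
        # Intermediate file that holds the byte-swapped stream
        self.lefile = originfile + '.le'
        # Output file: drop the 5-character extension (e.g. ".scel") and append ".txt"
        self.txtfile = originfile[0:len(originfile) - 5] + '.txt'
        # Two-slot buffer used when swapping a pair of bytes
        self.buf = [b'0' for x in range(0, 2)]
        self.listwords = []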

    # Convert the byte stream from big-endian to little-endian (swap every pair of bytes)
    def be2le(self):
        of = open(self.originfile, 'rb')
        lef = open(self.lefile, 'wb')
        contents = of.read()
        contents_size = len(contents)
        mo_size = contents_size % 2
        # Pad with one zero byte so that the length is even
        if mo_size > 0:
            contents_size += (2 - mo_size)
            contents += b'\x00'
        # Swap the two bytes of each 16-bit unit
        for i in range(0, contents_size, 2):
            self.buf[1] = contents[i]
            self.buf[0] = contents[i + 1]
            le_bytes = struct.pack('2B', self.buf[0], self.buf[1])
            lef.write(le_bytes)
        print('Byte-swapped (little-endian) stream written')
        of.close()
        lef.close()

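    def le2txt(self):
        lef = open(self.lefile, 'rb')
        txtf = open(self.txtfile, 'w', encoding='utf-8')
        # Read the byte-swapped stream as a hex string; the Baidu dictionary data starts at 0x350.
        # Note: the slice index counts hex characters, and two hex characters represent one byte.
        le_bytes = lef.read().hex()[0x350:]
        i = 0
        while i < len(le_bytes):
            result = le_bytes[i:i + 4]
            i += 4
            # Decode each 2-byte unit into a Chinese character, a pinyin letter or a symbol
            content = binascii.a2b_hex(result).decode('utf-16-be', errors='replace')
            # Check whether it is a Chinese character (U+4E00 to U+9FFF)
            if '\u4e00' <= content <= '\u9fff':
                self.listwords.append(content)
            else:
                if self.listwords:
                    word = ''.join(self.listwords)
                    txtf.write(word + '\n')
                    self.listwords = []
        # Write the last word if the data ends on a Chinese character
        if self.listwords:
            txtf.write(''.join(self.listwords) + '\n')
            self.listwords = []
        print('txt file written')
        lef.close()
        txtf.close()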

if __name__ == '__main__':

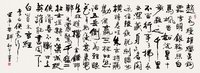

path = r'书法词汇大全【官方推荐】.scel'

bd = Baidu(path)

bd.be2le()

bd.le2txt()
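
Swapping every byte pair and then decoding each unit as UTF-16 big-endian should yield the same Chinese text as decoding the original bytes as UTF-16 little-endian, so the intermediate .le file is not strictly required. The following is a minimal sketch of that shortcut, not the method used in the script: the function name `extract_words` and its `start` parameter (a byte offset) are assumptions for illustration, and the right starting offset depends on the dictionary format.

```python
import re

# Runs of one or more Chinese characters in the range U+4E00..U+9FFF
CJK_RUN = re.compile('[\u4e00-\u9fff]+')


def extract_words(data, start=0):
    """Return runs of Chinese characters found in UTF-16-LE `data`.

    `start` is a byte offset (hypothetical parameter); it should be even so
    that decoding stays aligned on 2-byte units.
    """
    payload = data[start:]
    if len(payload) % 2:
        payload += b'\x00'  # pad to a whole number of 2-byte units
    text = payload.decode('utf-16-le', errors='replace')
    return CJK_RUN.findall(text)


sample = '书法\x00shu fa\x00词汇'.encode('utf-16-le')
print(extract_words(sample))  # ['书法', '词汇']
```

To apply it to a real file, read the file in binary mode and pass the bytes together with the offset at which the word data begins.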

III. Collecting Baidu Search Results

import requests
import tldextract
import time
from lxml import etree


def sava_data(filename, content):
    # Append one line to the output file
    with open(filename, "a", encoding="utf-8") as f:
        f.write(content + '\n')


def Redirect(url):
    # Follow the redirect behind a Baidu result link; on failure the original url is returned
    try:
        res = requests.get(url, timeout=10)
        url = res.url
    except Exception as e:
        print("4", e)
        time.sleep(1)
    return url

def baidu_search(wd, pn_max):
    # Baidu search crawler: given a keyword and a number of pages,
    # return the list of resolved result URLs (no deduplication is done here)
    url = "https://www.baidu.com/s"
    return_url = []
    for page in range(pn_max):
        pn = page * 10
        querystring = {"wd": wd, "pn": pn}
        headers = {
            'pragma': "no-cache",
            # "br" is left out: requests only decodes Brotli when the optional brotli package is installed
            'accept-encoding': "gzip, deflate",
            'accept-language': "zh-CN,zh;q=0.8",
            'upgrade-insecure-requests': "1",
            'user-agent': "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.115 Safari/537.36",
            'accept': "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",
            'cache-control': "no-cache",
            'connection': "keep-alive",
        }
        try:
            response = requests.request("GET", url, headers=headers, params=querystring, timeout=10)
            # Parse the HTML
            selector = etree.HTML(response.text, parser=etree.HTMLParser(encoding='utf-8'))
            # Result containers have ids pn+1 .. pn+10; take the href of each title link
            for i in range(1, 11):
                context = selector.xpath('//*[@id="' + str(pn + i) + '"]/h3/a[1]/@href')
                # title = selector.xpath('//*[@id="' + str(i) + '"]/h3/a/text()')
                # title = "".join(title)
                if not context:
                    # No result with this id on the page; skip it
                    continue
                # Follow the link; Redirect returns the final url if it can be reached
                xurl = Redirect(context[0])
                # return_url[title] = xurl
                return_url.append(xurl)
        except Exception as e:
            print("Page failed to load", e)
            continue
    return return_url

def get_root_domain(url):
    val = tldextract.extract(url)
    root_domain = val.domain + '.' + val.suffix
    return root_domain

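with open("keywordstest.txt", "r", encoding='utf-8') as f:
    for line in f.readlines():
        # Remove the trailing newline and skip blank lines
        keyword = line.strip()
        if not keyword:
            continue
        baidu_urls = baidu_search(keyword, 3)
        for url in baidu_urls:
            # Skip links that still point to Baidu itself
            if "baidu.com" in url:
                continue
            # print(url)
            url = get_root_domain(url)
            # "." means that neither a domain nor a suffix was found
            if url == ".":
                continue
            print(url)
            sava_data("rusult7.txt", url)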
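
The loop above writes every root domain it encounters, so a domain that shows up under several keywords or result pages ends up in the output file more than once. One possible way to avoid that, sketched here under the assumption that all domains fit in memory, is to pass them through an order-preserving deduplication step before saving. The helper name `unique_in_order` is hypothetical and not part of the original script.

```python
def unique_in_order(items):
    """Return the items with duplicates removed, keeping first-seen order."""
    seen = set()
    result = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result


domains = ["example.com", "example.org", "example.com", "example.net", "example.org"]
print(unique_in_order(domains))  # ['example.com', 'example.org', 'example.net']
```

A set alone would also remove duplicates, but it would lose the order in which the domains were found, which can be useful when comparing against the search ranking.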

Updated on May-23-2020

IV. Qiangzi's Method

1. The method Qiangzi describes in his video for obtaining the real URL behind a Baidu search result:

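import urllib.request  # Python 3 replacement for urllib2

# urls: the list of Baidu result links collected earlier
for i in urls:
    req = urllib.request.Request(i)
    req.add_header('User-Agent', '')
    # geturl() returns the final URL after any redirects
    realurl = urllib.request.urlopen(req).geturl()
    url = realurl.split('/')[2]  # host part of the real URL, e.g. www.example.com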

Reference: https://www.bilibili.com/video/BV1mW411v743?from=search&seid=3477489273158434483
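
Splitting on '/' works for simple URLs but is easy to get wrong when the URL carries a port or a path of varying depth. As a hedged alternative that is not taken from the video, the standard library's `urllib.parse.urlsplit` can extract the host name. The helper name `get_host` is an assumption for illustration, and it returns the full host rather than the registrable root domain.

```python
from urllib.parse import urlsplit


def get_host(url):
    """Return the lower-cased host name of `url`, or '' if there is none."""
    return urlsplit(url).hostname or ''


print(get_host('https://www.example.com/a/b?x=1'))  # www.example.com
print(get_host('http://Example.com:8080/'))         # example.com
```

To reduce the host to a root domain such as example.com, the result would still need to be checked against a public suffix list, which is what tldextract does in the script in section III.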

2. Recursion can also be used:

def getlist(keyword, domain, pn=0):
    if pn < 50:
        pn += 10
        return getlist(keyword, domain, pn=pn)
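
The skeleton above only shows how `pn` advances from one call to the next. A fuller sketch of the same idea might collect results page by page until the offset limit is reached. Here `fetch_page` is a hypothetical callback standing in for the actual request and parsing code, and the `results` accumulator and the domain filter are assumptions added for illustration.

```python
def getlist(keyword, domain, fetch_page, pn=0, results=None):
    """Recursively collect URLs containing `domain` from offsets 0, 10, ..., 40."""
    if results is None:
        results = []
    if pn >= 50:
        # Base case: offset limit reached, stop recursing
        return results
    for url in fetch_page(keyword, pn):
        if domain in url:
            results.append(url)
    return getlist(keyword, domain, fetch_page, pn=pn + 10, results=results)


def fake_fetch(keyword, pn):
    # Stand-in for a real search request: one matching and one non-matching URL per page
    return ['http://example.com/%s/%d' % (keyword, pn), 'http://other.test/%d' % pn]


found = getlist('python', 'example.com', fake_fetch)
print(len(found))  # 5
```

With only five pages the recursion depth is small; for a large number of pages a plain loop would avoid Python's recursion limit.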

Many of this uploader's Python videos are worth watching:

https://space.bilibili.com/266594796