I. Principle

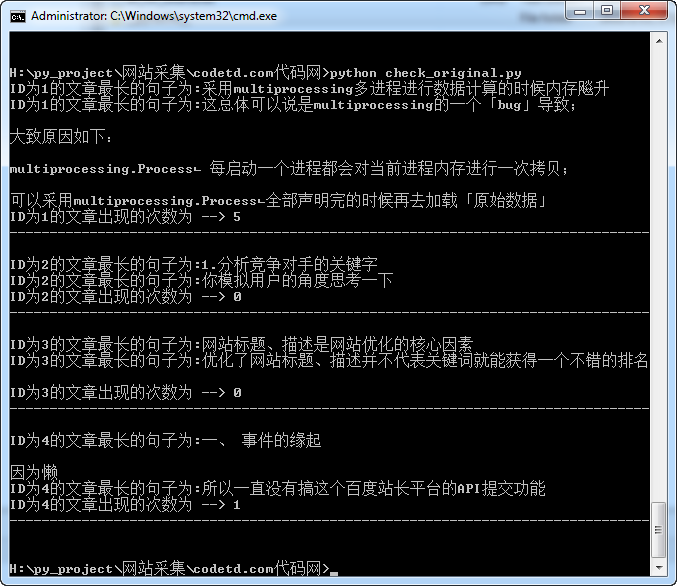

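1. First, split the article into sentences at commas. (This is a bit crude: colons and semicolons are not treated as separators, so a clause ending in one of them is counted as part of the same sentence.)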

2. Count the characters in each sentence and keep the two longest.

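3. Search Baidu for each of these two sentences, count how many times each one appears in the first page of Baidu results, and add the two counts. The article with the lowest total is considered the more original one.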
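Steps 1 and 2 can be sketched as a small standalone function. This is a minimal illustration, not the author's code: the name `longest_sentences` and its parameters are hypothetical, and it assumes the article may use both ASCII `,` and full-width `，` commas. The `len(x) > 10` filter mirrors the one in the script in section III.

```python
import re


def longest_sentences(text, k=2, min_len=10):
    """Split `text` at ASCII or full-width commas and return the `k` longest pieces.

    Pieces with `min_len` characters or fewer are discarded.
    """
    pieces = (p.strip() for p in re.split(r"[,，]", text))
    candidates = [p for p in pieces if len(p) > min_len]
    # sorted() is stable, so equally long sentences keep their order in the article.
    return sorted(candidates, key=len, reverse=True)[:k]


article = "短句，这是一个比较长的句子用于测试效果,这是整篇文章里面最长的一个句子没有之一，又一个短句"
print(longest_sentences(article))
# ['这是整篇文章里面最长的一个句子没有之一', '这是一个比较长的句子用于测试效果']
```

Sorting by length is what "take the two longest" requires; simply slicing the first two long-enough pieces would return whichever sentences happen to come first.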

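The reasoning: if an article has been widely republished by other sites, then almost any sentence taken from it will turn up verbatim duplicates in a Baidu search.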
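The scoring rule in step 3 (sum the hit counts, lower is more original) is sketched below without any network access. The function `originality_scores` and the hit counts are hypothetical; `count_hits` stands in for a real search-and-count function such as the script's `getContent`.

```python
from typing import Callable, Dict, List, Tuple


def originality_scores(
    articles: Dict[str, List[str]],
    count_hits: Callable[[str], int],
) -> List[Tuple[str, int]]:
    """Sum the hit counts of each article's probe sentences, sorted ascending.

    A lower total means fewer verbatim copies were found, so the article
    is ranked as more original.
    """
    totals = {aid: sum(count_hits(s) for s in sents) for aid, sents in articles.items()}
    return sorted(totals.items(), key=lambda item: item[1])


# Made-up counts standing in for live Baidu queries.
fake_hits = {"sentence A1": 9, "sentence A2": 7, "sentence B1": 0, "sentence B2": 1}
articles = {"A": ["sentence A1", "sentence A2"], "B": ["sentence B1", "sentence B2"]}
print(originality_scores(articles, lambda s: fake_hits[s]))
# [('B', 1), ('A', 16)]
```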

II. Results

III. Code

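```python
# coding: utf-8
# Python 3. Requires requests and mysqlclient (which provides the MySQLdb module).
import re
import time
import json

import requests
import MySQLdb as mdb


def search(req, html):
    """Return capture group 1 of regex `req` in `html`, or 'no' if nothing matches."""
    text = re.search(req, html)
    if text:
        data = text.group(1)
    else:
        data = 'no'
    return data


def date(timeStamp):
    """Format a Unix timestamp as 'YYYY-MM-DD HH:MM:SS' in local time."""
    timeArray = time.localtime(int(timeStamp))
    otherStyleTime = time.strftime("%Y-%m-%d %H:%M:%S", timeArray)
    return otherStyleTime
```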

```python
def getHTml(url):
    # The inner re.sub strips the http(s):// prefix; the outer search
    # captures the host before the first '/', e.g. www.baidu.com.
    host = search('^([^/]*?)/', re.sub(r'^https?://', '', url))

    headers = {
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8",
        # sdch is not advertised because requests cannot decode it
        "Accept-Encoding": "gzip, deflate",
        "Accept-Language": "zh-CN,zh;q=0.8,en;q=0.6",
        "Cache-Control": "no-cache",
        "Connection": "keep-alive",
        # "Cookie": "",
        "Host": host,
        "Pragma": "no-cache",
        "Upgrade-Insecure-Requests": "1",
        "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36",
    }

    # Proxy server
    proxyHost = "proxy.abuyun.com"
    proxyPort = "9010"

    # Proxy tunnel credentials
    proxyUser = "XXXX"
    proxyPass = "XXXX"

    proxyMeta = "http://%(user)s:%(pass)s@%(host)s:%(port)s" % {
        "host": proxyHost,
        "port": proxyPort,
        "user": proxyUser,
        "pass": proxyPass,
    }
    proxies = {
        "http": proxyMeta,
        "https": proxyMeta,
    }

    html = requests.get(url, headers=headers, timeout=10)
    # To route the request through the proxy instead:
    # html = requests.get(url, headers=headers, timeout=30, proxies=proxies)
    return html.content
```
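A note on the `host` line: the pattern `'^([^/]*?)/'` only matches when a `/` follows the host, which holds for the Baidu query URLs used here but not for a bare `https://www.baidu.com`. Below is a sketch of a more robust alternative using the standard library's `urlsplit` (the helper name `host_of` is hypothetical). Also, requests fills in the `Host` header from the URL on its own, so setting it explicitly is optional.

```python
from urllib.parse import urlsplit


def host_of(url):
    """Return the network location of `url`, e.g. 'www.baidu.com'.

    Works whether or not the URL has a path after the host.
    """
    return urlsplit(url).netloc


print(host_of("http://www.baidu.com/s?wd=test"))  # www.baidu.com
print(host_of("https://www.baidu.com"))           # www.baidu.com
```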

```python
from urllib.parse import quote


def getContent(word):
    """
    Search Baidu for `word` and return how many first-page results
    contain `word` verbatim in their abstract.
    """
    # Percent-encode the sentence so characters such as '&', '#' or '%' cannot break the query string.
    pcurl = ('http://www.baidu.com/s?q=&tn=json&ct=2097152&si=&ie=utf-8&cl=3&wd=%s&rn=10'
             % quote(word))
    html = getHTml(pcurl)

    a = 0
    html_dict = json.loads(html)  # Baidu returns JSON for this query, so it can be parsed directly
    for tag in html_dict['feed']['entry']:
        if 'title' in tag:
            title = tag['title']
            url = tag['url']
            rank = tag['pn']              # ranking position
            pub_time = date(tag['time'])  # renamed so it does not shadow the time module
            abstract = tag['abs']         # result snippet; renamed so it does not shadow abs()
            if word in abstract:
                a += 1
    return a
```
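To check the counting logic without querying Baidu, the sketch below applies the same rule as `getContent` to a hand-made payload. Only the `feed` → `entry` → `title`/`abs` layout is taken from the code above; the sample data is invented and the helper `count_in_feed` is hypothetical.

```python
import json


def count_in_feed(payload, word):
    """Count entries that have a 'title' and whose 'abs' contains `word`."""
    data = json.loads(payload)
    return sum(1 for entry in data["feed"]["entry"]
               if "title" in entry and word in entry.get("abs", ""))


sample = json.dumps({"feed": {"entry": [
    {"title": "t1", "abs": "...原创内容的检测方法..."},
    {"title": "t2", "abs": "完全无关的摘要"},
    {"pn": 3},  # entries without a title are skipped, as in getContent
]}}, ensure_ascii=False)

print(count_in_feed(sample, "原创内容的检测方法"))  # 1
```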

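```python
con = mdb.connect('127.0.0.1', 'root', '', 'python_test', charset='utf8')

with con:
    cur = con.cursor()
    cur.execute("select aid,content from article_content limit 5")
    for aid, content in cur.fetchall():
        # Strip HTML tags from the stored article body.
        content_format = re.sub(r'<[^>]*?>', '', content)

        # Split at ASCII or full-width commas, drop pieces of 10 characters or fewer,
        # and keep the two longest sentences.
        parts = (x.strip() for x in re.split(r'[,，]', content_format))
        sentences = [x for x in parts if len(x) > 10]

        a = 0
        for z in sorted(sentences, key=len, reverse=True)[:2]:
            print("Longest sentence in article {}: {}".format(aid, z))
            time.sleep(5)  # pause between queries to avoid being blocked by Baidu
            a += getContent(z)

        print("Occurrences for article {} --> {}".format(aid, a))
        print("-" * 80)
```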
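The tag-stripping regex leaves HTML entities such as `&nbsp;` or `&ldquo;` in place. If the stored articles contain entities (an assumption about the data, not something the original states), a sentence like `原创&ldquo;检测&rdquo;` would never match the plain text Baidu shows in its snippets. A possible cleanup step with the standard `html` module, using a hypothetical helper name:

```python
import html
import re


def html_to_text(fragment):
    """Strip tags the same way as the script, then decode HTML entities."""
    no_tags = re.sub(r"<[^>]*?>", "", fragment)
    return html.unescape(no_tags)


print(html_to_text("<p>原创&ldquo;检测&rdquo;&nbsp;方法</p>"))
```

Note that `&nbsp;` decodes to a non-breaking space (`\xa0`), not an ordinary space; normalize it as well if exact matching matters.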

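```python
# Optional: query many phrases in parallel with a process pool.
# wordfile is a placeholder for a text file with one phrase per line.
# import multiprocessing
# words = open(wordfile).readlines()
# pool = multiprocessing.Pool(processes=10)
# results = []
# for word in words:
#     word = word.strip()
#     results.append(pool.apply_async(getContent, (word,)))
# pool.close()
# pool.join()
# counts = [r.get() for r in results]
```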
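Since each query spends most of its time waiting on the network, a thread pool is a lighter alternative to the commented-out `multiprocessing.Pool`. This swaps in `concurrent.futures.ThreadPoolExecutor`, which is not what the original uses; `count_all` is a hypothetical helper and `count_fn` stands in for `getContent`. Ten parallel queries may well trigger Baidu's rate limiting, which is why the main loop sleeps between requests.

```python
from concurrent.futures import ThreadPoolExecutor


def count_all(words, count_fn, workers=10):
    """Run count_fn on every non-empty phrase concurrently; return {phrase: count}."""
    phrases = [w.strip() for w in words if w.strip()]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        counts = list(pool.map(count_fn, phrases))  # map keeps input order
    return dict(zip(phrases, counts))


# len stands in for getContent so the example runs offline.
print(count_all(["foo\n", "  \n", "barbaz\n"], len))
# {'foo': 3, 'barbaz': 6}
```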